AI Amplifies Misinformation: The Rise of Gish Galloping in Politics

Locale: Singapore

Artificial Intelligence, Misinformation, and the New Era of Political Persuasion

(A comprehensive summary of the Channel NewsAsia commentary article – “Artificial Intelligence, misinformation and political persuasion: Gish galloping and the need for regulatory frameworks”)

1. The Stakes of AI‑Generated Misinformation

The commentary opens by framing artificial intelligence as both a powerful ally and a formidable threat to democratic discourse. Modern generative models—especially large language models (LLMs) and diffusion‑based image generators—can now produce text, audio, and video that are virtually indistinguishable from human‑created content. When this capability is wielded without oversight, the result is a deluge of misinformation that can be tailored to exploit individual biases, manipulate public sentiment, and shape election outcomes.

The article emphasizes that AI does not simply repeat pre‑existing falsehoods; it can invent plausible narratives, “hallucinate” facts, and even synthesize entire political speeches. The scale of the problem is illustrated by a concept known as “Gish galloping.” Named after the creationist debater Duane Gish, the term describes the debating tactic of flooding a discussion with so many disparate claims that no opponent can rebut them all in the time available. AI systems can accelerate this tactic by producing thousands of misinformation snippets in a fraction of the time it would take a human.

2. Political Persuasion in the AI Age

Political campaigns increasingly rely on data‑driven micro‑targeting. The article details how AI tools can now analyze vast troves of social‑media data, online search histories, and demographic information to craft hyper‑personalized messages. These messages can be delivered via text, images, or video, and can be adjusted dynamically in real time based on audience reactions.

Key points highlighted include:

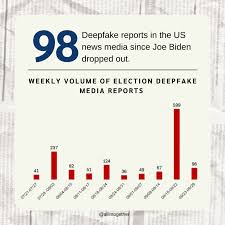

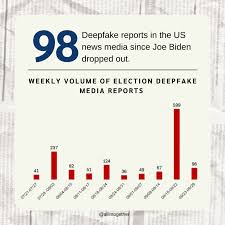

- Deepfakes and Synthetic Media. AI‑generated videos or audio clips of public figures saying things they never said can undermine trust in political institutions. The commentary cites recent European court rulings that treat synthetic political content as potentially defamatory, underscoring the legal gray area surrounding deepfakes.

- Automated Bot Networks. Machine‑learning algorithms can orchestrate coordinated bot campaigns that amplify political slogans, drown out dissenting voices, or seed fake grassroots movements. The article points to studies showing how AI‑driven bots can create the illusion of mass support for a policy, thereby influencing policy‑making.

- Narrative Engineering. AI can “engineer” narratives by combining seemingly unrelated facts into compelling but false stories. When these narratives are disseminated through algorithmic recommendation engines, they can reach millions before any fact‑checking mechanism intervenes.

The commentary also links to recent case studies, such as the 2023 “Political Persuasion Index” released by a European NGO, which ranked AI‑powered political persuasion tactics among the most concerning threats to electoral integrity.

3. Regulatory Landscape and Emerging Safeguards

Recognizing the magnitude of the threat, the article examines the regulatory responses underway in several jurisdictions.

3.1 European Union

The EU’s forthcoming Artificial Intelligence Act is highlighted as a pioneering framework that categorises AI systems by risk level. Under this act, “high‑risk” AI applications—such as those used for political persuasion—would face strict oversight, mandatory audits, and explicit labeling requirements. The article notes that the act has already prompted EU member states to revise their election‑law frameworks to incorporate AI‑specific provisions.

3.2 United States

The commentary describes the U.S. policy landscape as fragmented: federal agencies have issued only limited guidance on AI‑generated political content. The article references a recent bipartisan bill in the House that would require platforms to disclose when a piece of content is AI‑generated and to provide users with a fact‑check flag. However, critics argue that without a clear definition of “AI‑generated content,” enforcement will be weak.

3.3 Singapore and the Greater Bay Area

Singapore’s AI Governance Framework is mentioned as a model that balances innovation with risk mitigation. The commentary notes that Singapore has mandated the disclosure of AI usage in political messaging and has established an independent oversight board. The Greater Bay Area, a cluster of cities in southern China, has similarly begun drafting local AI guidelines that require content creators to label synthetic media.

3.4 Platform‑Level Measures

The article also reviews recent policy changes by major social media platforms. For instance, Facebook’s Political Persuasion Policy now prohibits the use of AI‑generated deepfakes in political ads. Twitter’s new “Verified AI‑Generated” badge indicates when a tweet is produced by a machine. The commentary stresses that while these measures are steps forward, they rely heavily on self‑regulation and can be circumvented by sophisticated actors.

4. The Path Forward: Ethics, Transparency, and Public Literacy

The piece concludes by arguing that regulation alone cannot neutralise the risks posed by AI. Instead, a multi‑pronged approach is necessary:

- Technical Counter‑measures. Development of AI‑based fact‑checking tools that can flag hallucinations and synthetic media in real time.

- Legal Recourse. Strengthening defamation and election‑law statutes to cover AI‑generated political content.

- Public Education. Promoting digital literacy programmes that teach citizens how to spot deepfakes and recognize algorithmically amplified misinformation.

- Industry Collaboration. Encouraging AI developers, platforms, and policymakers to share threat intelligence and best practices.

The commentary ends with a stark reminder: “AI is no longer a laboratory curiosity; it has become an instrument of political persuasion that can reach the electorate faster and more efficiently than any human could.” The authors call for urgent, coordinated action to safeguard the integrity of public discourse while still enabling the constructive use of AI technologies.

5. Key Takeaways

- Scale and Speed. AI can generate massive volumes of misinformation, making “Gish galloping” tactics more potent.

- Targeted Persuasion. Machine learning enables hyper‑personalized political messaging that can sway voter attitudes.

- Regulatory Gaps. Current laws are uneven; the EU’s AI Act stands out as a comprehensive model, but U.S. policy remains fragmented.

- Platform Responsibility. Big tech firms are beginning to implement AI‑content labeling, yet enforcement and transparency remain challenges.

- Holistic Response Needed. Technical, legal, educational, and collaborative measures must converge to mitigate AI‑driven misinformation.

The commentary’s thorough examination of AI’s dual role—as a catalyst for innovation and a vehicle for political manipulation—offers a sobering look at the future of democratic communication. It underscores that safeguarding electoral integrity in an age of synthetic media will require proactive, collaborative, and adaptive strategies, lest the very tools meant to enlighten us instead distort the facts that underpin our collective decision‑making.

Read the full Channel NewsAsia Singapore article at:

https://www.channelnewsasia.com/commentary/artificial-intelligence-misinformation-political-persuasion-gish-galloping-5584191